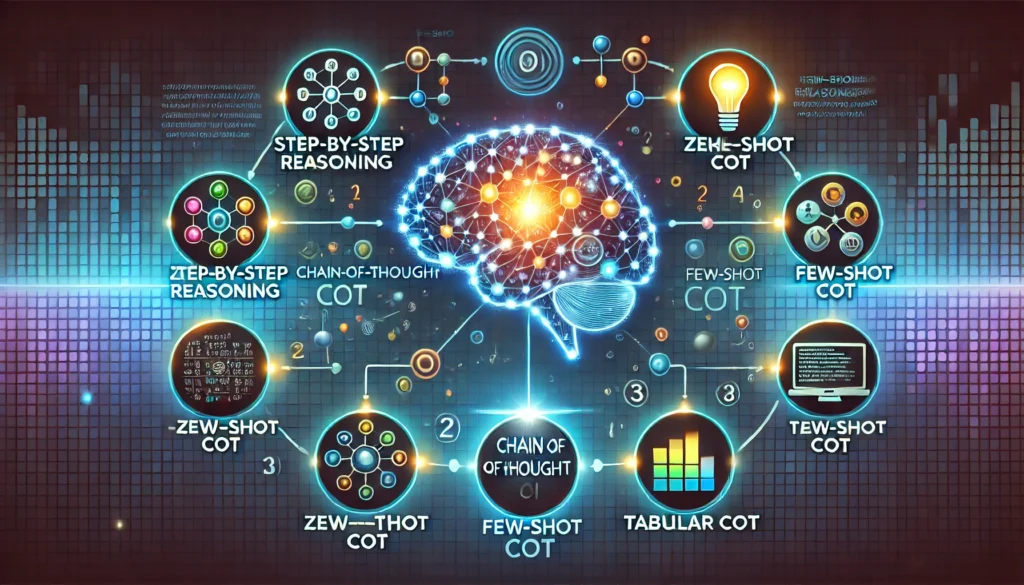

What is chain-of-thought prompting?

Chain-of-thought (CoT) prompting is a technique used in artificial intelligence (AI) to improve the reasoning and problem-solving abilities of large language models (LLMs). It asks the model to break a complex problem into smaller, logical steps and work through them in order. This contrasts with traditional prompts, which expect a single, direct response.

CoT prompting is compelling because it mirrors the way humans approach problem solving. For instance, when solving a math problem or weighing a decision, we might think aloud or jot down intermediate steps before arriving at the final solution. By replicating this process, CoT prompts help AI models perform better on tasks that call for multi-step reasoning, logical deduction, or numerical calculation.
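As a minimal sketch of this contrast, the snippet below builds a direct prompt and a chain-of-thought prompt for the same arithmetic question. The function names, the sample question, and the exact instruction wording are illustrative assumptions rather than a prescribed format; the resulting strings would be passed to whatever LLM client is in use.

```python
# Hypothetical helpers: names and wording are illustrative assumptions.


def build_direct_prompt(question: str) -> str:
    """Ask for the answer only, with no intermediate reasoning."""
    return f"Question: {question}\nAnswer with the final result only."


def build_cot_prompt(question: str) -> str:
    """Ask the model to write out intermediate steps before answering."""
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, showing each intermediate "
        "calculation, then give the final result on a line starting with "
        "'Answer:'."
    )


question = "A shop sells pens at 3 for $4. How much do 12 pens cost?"
direct_prompt = build_direct_prompt(question)
cot_prompt = build_cot_prompt(question)

print(direct_prompt)
print("---")
print(cot_prompt)
```

The only difference between the two prompts is the instruction to show intermediate steps, which is what invites the model to reason before it commits to an answer.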

CoT prompting is especially helpful in fields such as science and programming, and in any subject where the solution depends on a logical sequence of steps rather than a one-step answer. With CoT, AI models can produce clearer, more consistent, and more understandable results, reducing the errors often associated with the opaque, “black box” behavior of standard prompting methods.

CoT addresses the black-box nature of AI systems and offers insight into their reasoning by enabling a model to “think aloud,” which makes its outputs easier for people to understand and easier to trust.

What is TCS’s Chain-of-Thought prompting?

At Tata Consultancy Services (TCS), chain-of-thought prompting is used to optimize workflows, improve decision-making, and support AI-driven business processes. As a global leader in IT services, TCS uses advanced AI techniques such as CoT to make a range of business areas more effective, including customer support, software development, and management recommendations.

TCS uses chain-of-thought prompting to build AI systems that simulate human-like reasoning when dealing with complex scenarios. For example, project managers and decision-makers use CoT-enabled AI tools to evaluate potential outcomes, allocate resources, and adjust plans by working through related tasks step by step. Additionally, CoT-based AI can analyze and respond to customer queries by breaking them down methodically, giving faster and more accurate responses.

Another use is predictive analytics, where AI models turn historical data into forecasts or trends. By breaking these tasks into logical steps, CoT helps produce insights that are accurate and contextually relevant. Through its adoption, TCS shows how AI-driven techniques such as CoT can help organizations achieve operational efficiency, improve customer interactions, and foster a culture of continuous improvement.

What is Gen AI’s Chain-of-Thought?

Chain-of-thought prompting plays a key role in improving the creative and reasoning capabilities of models in generative artificial intelligence (Gen AI). Gen AI systems, such as OpenAI’s GPT models, rely on CoT to handle tasks that call for structured thinking, such as writing essays, solving puzzles, or coming up with original ideas.

In story writing, for instance, CoT prompting guides the AI to develop the narrative structure, build characters, and move plotlines step by step toward their conclusion. Similarly, when answering scientific or technical questions, the model can work through the problem in stages, which exposes the reasoning process and strengthens the final answer.

Gen AI benefits from CoT prompting through improved performance on tasks that require multi-step reasoning. Rather than producing an immediate response, the AI lays out its reasoning, identifies sub-tasks, and then connects the results into a complete solution. This improves accuracy and lets users follow the AI’s line of reasoning. By adopting CoT prompting, Gen AI systems can deliver nuanced, high-quality results that align with human expectations. The method extends what generative AI can do, balancing creativity and coherence while preparing it to handle challenging real-world problems.

What is Chain-of-Thought in IBM?

IBM integrates chain-of-thought prompting into its AI solutions to improve reasoning, streamline decision-making, and tackle complex enterprise problems. Through AI initiatives such as Watson, IBM shows how CoT can be applied in industries like healthcare, finance, and supply chain.

For example, in healthcare IBM uses CoT prompting to analyze medical records, diagnostic data, and patient outcomes. The AI follows a step-by-step approach to support accurate diagnoses and treatment plans. In finance, CoT prompting is used to assess risk factors, perform compliance checks, and provide insights that support decisions.

Additionally, IBM employs CoT prompting in its virtual assistants and AI-driven chatbots. By breaking customer queries into logical parts, these systems can give more accurate and considered replies, which improves customer satisfaction and loyalty.

Chain-of-thought prompting makes IBM’s AI solutions more transparent and explainable, which is essential for large enterprise applications. This is consistent with IBM’s commitment to providing trustworthy AI systems that enable organizations to make confident, insight-driven decisions.

What is the difference between chain-of-thought prompting and few-shot prompting?

Chain-of-thought prompting and few-shot prompting are two distinct techniques for improving the performance of AI models, and they address different needs.

Few-shot prompting provides the AI with a few examples (shots) to guide its understanding of a task. These examples illustrate the format, style, or context the AI should follow. For instance, if the task is to translate text, a few example translations can improve the model’s performance.

Chain-of-thought prompting, on the other hand, focuses on breaking complex tasks into manageable steps. It does not necessarily rely on examples to teach the task structure; instead, it instructs the model to “think” step by step before giving the final answer. This is especially useful for tasks that call for multi-step reasoning, such as solving math problems or analyzing complex scenarios.

Few-shot prompting is more effective for improving the model’s understanding of a task and for teaching task-specific patterns, while chain-of-thought prompting is better suited to improving interpretability and reasoning. Combining the strengths of both techniques often gives particularly strong results.
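One way to picture the combination of the two techniques is a few-shot prompt whose examples also carry worked reasoning. The sketch below is illustrative only: the `Example` class, the `build_few_shot_prompt` function, the `Q:`/`Reasoning:`/`A:` labels, and the sample questions are all assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One demonstration shown to the model (hypothetical structure)."""
    question: str
    reasoning: str  # worked steps, used only when with_reasoning=True
    answer: str


def build_few_shot_prompt(examples, question, with_reasoning):
    """Build a few-shot prompt; optionally include each example's reasoning."""
    blocks = []
    for ex in examples:
        lines = [f"Q: {ex.question}"]
        if with_reasoning and ex.reasoning:
            lines.append(f"Reasoning: {ex.reasoning}")
        lines.append(f"A: {ex.answer}")
        blocks.append("\n".join(lines))
    # End on the cue the model should continue from.
    final_cue = "Reasoning:" if with_reasoning else "A:"
    blocks.append(f"Q: {question}\n{final_cue}")
    return "\n\n".join(blocks)


examples = [
    Example(
        question="Tom has 3 boxes with 4 apples each. How many apples?",
        reasoning="Each box holds 4 apples and there are 3 boxes, so 3 * 4 = 12.",
        answer="12",
    ),
    Example(
        question="A train travels 60 km per hour for 2 hours. How far does it go?",
        reasoning="Distance is speed times time, so 60 * 2 = 120 km.",
        answer="120 km",
    ),
]

new_question = "A pack has 6 bottles. How many bottles are in 5 packs?"
plain = build_few_shot_prompt(examples, new_question, with_reasoning=False)
combined = build_few_shot_prompt(examples, new_question, with_reasoning=True)
print(combined)
```

With `with_reasoning=False` the examples show only the expected answer format; with `with_reasoning=True` they also demonstrate the step-by-step style the model is expected to imitate.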

What Does Chain of Thought Mean?

The term “chain of thought” refers to a sequence of connected ideas, reasoning steps, or mental processes that lead to a conclusion. It is a natural way of reasoning logically, in which one idea leads to the next in a coherent sequence.

When deciding what to make for dinner, for instance, a chain of thought might involve checking the available ingredients, considering dietary preferences, and accounting for time constraints. This step-by-step approach structures the decision and ensures that the final choice accounts for every relevant factor.

In artificial intelligence, the chain-of-thought idea enables machines to carry out this human-like reasoning process. Instead of jumping to conclusions, AI models work through intermediate steps, which improves accuracy and transparency. This approach is particularly useful for tasks that call for logical reasoning, such as analysis, solving puzzles, or forming predictions. By mirroring the human chain of thought, AI systems become more capable and more relatable, bridging the gap between human and machine reasoning.

AI models that use techniques such as CoT prompting also gain improved interpretability, fewer errors, and greater adaptability, even in unfamiliar situations.

Zero-Shot Chain-of-Thought Prompting: What Is It?

Zero-shot chain-of-thought prompting is an advanced AI technique that allows a model to reason step by step without relying on prior examples or task-specific training. The model is simply prompted to “think aloud” and lay out its reasoning from the initial question to the final answer.

For example, a model may be asked the riddle, “What has keys but can’t open locks?” It could first interpret the question, consider possible answers, and conclude with “a piano.” The step-by-step reasoning leads to a more transparent and careful answer.

Zero-shot CoT prompting is a powerful technique because it doesn’t require extensive training or curated datasets. Instead, it relies on the inherent capabilities of large language models to generate reasoning paths based solely on the prompt. This makes it highly versatile for a range of tasks, from answering queries to solving problems that call for rigorous logic.
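A zero-shot chain-of-thought prompt can be sketched in a few lines. The trigger phrase “Let’s think step by step.” is the cue commonly used for this technique; everything else here is an assumption for illustration: the helper names, the `Answer:` line convention, and `sample_response`, which is a hand-written stand-in and not real model output.

```python
from typing import Optional

# Commonly used zero-shot CoT cue; no worked examples are supplied.
COT_TRIGGER = "Let's think step by step."


def build_zero_shot_cot_prompt(question: str) -> str:
    """Append the reasoning cue to a bare question (hypothetical helper)."""
    return (
        f"Q: {question}\n"
        "Finish with a line of the form 'Answer: <final answer>'.\n"
        f"A: {COT_TRIGGER}"
    )


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if absent."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.lower().startswith("answer:"):
            return stripped[len("answer:"):].strip()
    return None


prompt = build_zero_shot_cot_prompt("What has keys but can't open locks?")

# Hand-written stand-in for a model reply, used only to show the parsing step.
sample_response = (
    "The question is a riddle, so 'keys' may not mean door keys.\n"
    "Keyboards and pianos both have keys.\n"
    "A piano has keys but cannot open locks.\n"
    "Answer: a piano"
)

print(prompt)
print(extract_final_answer(sample_response))
```

Asking for a marked final line keeps the reasoning visible while still letting downstream code pick out the answer; a reply that omits the marker simply yields `None`.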

Prompts are instructions or inputs given to AI models to guide their output. There are three basic types of prompts:

- Zero-Shot Prompting: The model is given a task with no prior examples or context. Its ability to produce a response depends only on its training data. Although powerful, it might not always produce accurate results for complex tasks.

- Few-Shot Prompting: The model is given a few examples to help it understand the context or task format. This improves performance by showing the expected approach, especially for tasks such as formatting, structuring, or translation.

- Chain-of-Thought Prompting: The model is guided to reason through a task step by step. This is particularly useful for multi-step problems because it makes the AI’s output logical, consistent, and transparent.